The collaboration between XR4Europe and XRMust opens in 2024 with a first interview dedicated to one of our favorite artists. We are talking about Jason Moore, who, with his team at The MetaMovie, created Alien Rescue, a production at the forefront of the immersive industry for its narrative and entertainment potential, but also for the way it approaches virtual production, audiences, and the possibilities offered by the metaverse today.

When we came across The MetaMovie Presents: Alien Rescue at the Venice Film Festival almost four years ago, we already knew that something new was about to make its way into the world of immersive storytelling and indelibly leave its mark.

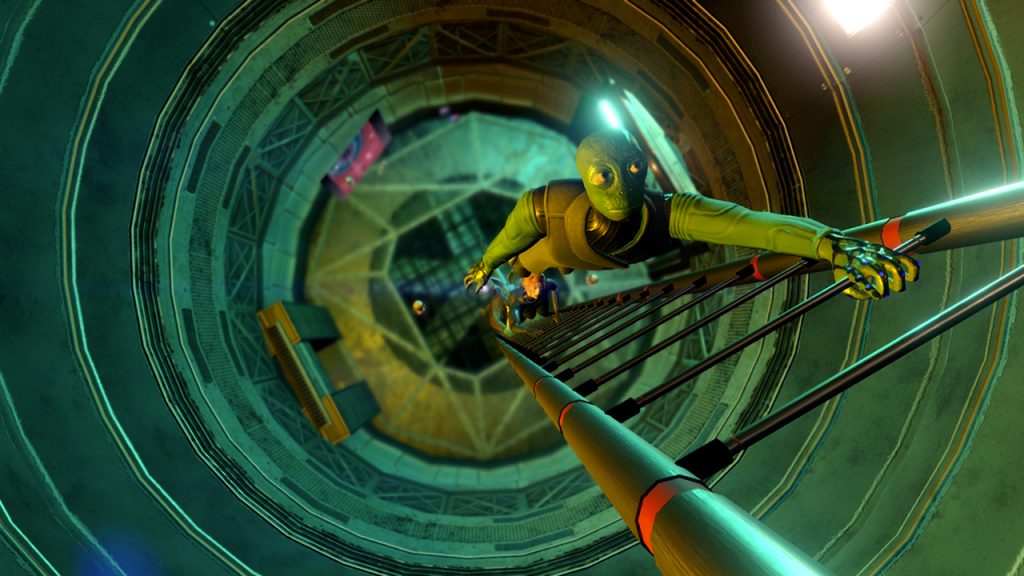

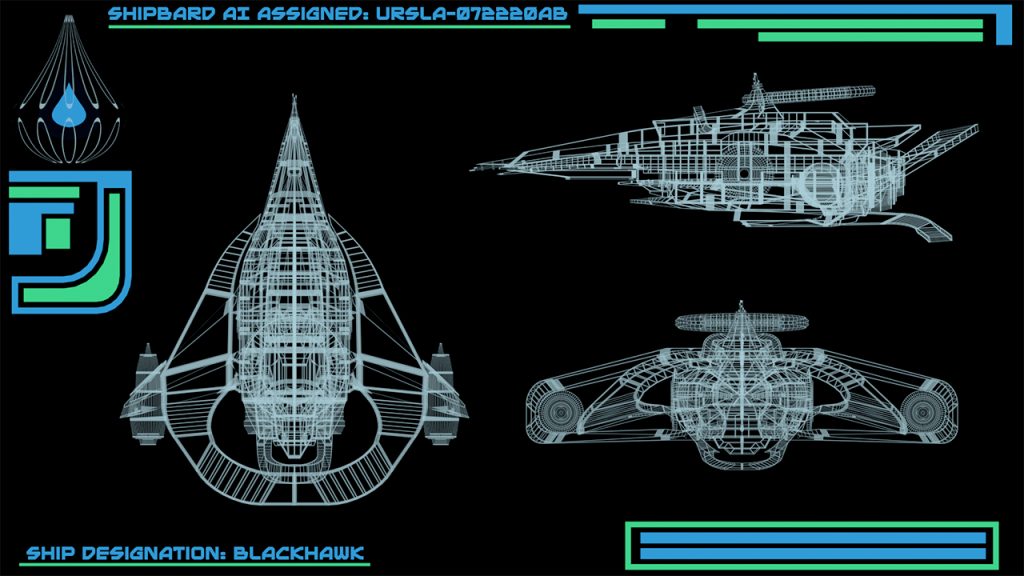

This sci-fi VR experience takes place on the social VR platform Resonite, and one or more paying users can participate as “heroes”. Accompanied by flesh-and-blood actors playing various characters in the story, and by an audience of 15 people in the role of silent Eyebots, they must carry out a rescue mission inside a huge spaceship on the fictional planet of Palnetter.

The project, born as early as 2018 out of the interest in VR of Jason Moore, director and CEO of The MetaMovie, is today, thanks to its experimental nature, one of the longest-running, most elaborate and most innovative examples of cinematic production in virtual reality. With its two and a half years of live performances (still ongoing), The MetaMovie Presents: Alien Rescue stands proudly at the intersection of virtual reality, film and role-playing. It is a great example of a work that has been able to engage audiences and bring virtual reality to those who lack the financial or physical means to experience it.

Creating Reality: XR Interview with Jason Moore

Last March 5, XR4Europe, in collaboration with XRMust, took us viewers, as well as many industry experts, on a journey in interview form to discover how Jason and his team “design, create, and iterate their immersive cinematic production inside platforms like Resonite”.

Between practical examples of coding, car chases, and laser challenges worthy of the best heist movies, the enthusiastic moderation of Michael Barngrover helped Jason Moore, Alien Rescue developer Zyzyl and actors Craig Woodword, Marinda Botha, and Kenneth Rogeau reflect on several topics: new directions for immersive production, the role of coding, and the relationship between actors, storytelling and improvisation. They also discussed the special multicam created by Zyzyl themselves. Under the watchful eye of Carlos Austin, it has become a very powerful tool for transforming a virtual story that few can physically access into an immersive cinematic experience, one that opens the door to new distribution possibilities and new audiences.

Working “on-site”: the potential of connecting remotely in a common space

The demonstration speaks for itself: Jason and his team were able to recreate in a matter of minutes, live and while explaining it to the audience, a car chase (and a shootout, too, with unhappy consequences for the director…) on the set they use for Alien Rescue, simply using the intuitive tools offered by Resonite.

This is not the first time that performing artists have told us how effective co-creation directly on the platform is (we will return to this topic in the coming weeks with our interview on The Saga of Sage), but the work of The MetaMovie team is a clear confirmation.

Being in the same place, if only virtually, does more than overcome the geographical barriers between the creatives working on the experience. It also streamlines and considerably speeds up the creation process.

The whole team can see in real time the results of a change to lights or props. They can work simultaneously in the same space on different aspects of the work and then compare notes on the spot about what is effective. They can consult on each other’s needs as early as the creation stage. And, to mention another example, when rehearsing a scene the director does not need to handle equipment, find locations, and physically manage the scene, with all the costs in money and time that this entails. They can simply scale themselves up to gain control over the scene and make it easier to choreograph, observing everything from above as if they had a miniature location and toy soldiers (the actors) to place.

In short, a series of opportunities that greatly help stage management and, at the same time, make it possible to evaluate results immediately.

Protoflux: using coding to give more agency to the audience

Protoflux, Resonite’s node-based scripting language, which can be manipulated directly in the 3D space, is one of the platform’s most useful tools. Although many, as Zyzyl reminds us, continue to prefer text-based coding (which is likely to come to Resonite in the future anyway), the visual scripting language gives immediate feedback on each command, making it possible to verify right away both that the process is correct and that the result looks as intended.

Again, operating directly in the platform optimizes production timelines, but that is not the only use Jason Moore and his team make of the tool.

In fact, where Protoflux really proves its usefulness is in interacting with audiences outside the virtual world, specifically The MetaMovie’s followers on Twitch.

For years already, there has been a widespread trend of watching people play video games when we cannot play them ourselves. This phenomenon is even more relevant in the immersive field as not everyone has the means to equip themselves with the necessary tools to access virtual worlds, even more so when we’re talking about social VR worlds such as Resonite, which require high-end PCVR and do not support stand-alone headsets.

Since the beginning of his work with The MetaMovie, Moore has shown a strong interest in connecting virtual worlds with the real one. This is for two primary reasons, as he explains in the interview. First, it is difficult to share the excitement that many feel about VR without a practical way of showing it to those without a headset. Second, opening up to streaming services expands the possibilities of finding new audiences and, as a result, generates new revenue.

With Protoflux, a viewer connected online can interact with the virtual experience through commands that make things happen in the virtual world as the story unfolds. Explosions, music, special effects: anything can be triggered without the actors’ knowledge.
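For readers who think in text-based code, the idea of chat commands triggering effects can be sketched in a few lines of Python. This is only an illustration under our own assumptions, not The MetaMovie's actual Protoflux setup: the `CommandRouter` class, the `!explosion` and `!music` commands, and the viewer names are all hypothetical, and nothing here calls a real Resonite or Twitch API.

```python
from typing import Callable, Dict, List

# Hypothetical sketch: none of these names come from Resonite, Protoflux, or Twitch.
Effect = Callable[[str], str]


class CommandRouter:
    """Maps chat commands such as "!explosion" to effect callbacks."""

    def __init__(self, prefix: str = "!") -> None:
        self.prefix = prefix
        self.effects: Dict[str, Effect] = {}
        self.log: List[str] = []  # effects triggered so far, in order

    def register(self, name: str, effect: Effect) -> None:
        """Associate a command name (case-insensitive) with an effect."""
        self.effects[name.lower()] = effect

    def handle(self, viewer: str, message: str) -> bool:
        """Trigger the matching effect; return False for anything else."""
        text = message.strip()
        if not text.startswith(self.prefix):
            return False  # ordinary chat, not a command
        parts = text[len(self.prefix):].split()
        if not parts:
            return False  # a bare prefix with no command name
        effect = self.effects.get(parts[0].lower())
        if effect is None:
            return False  # unknown command
        self.log.append(effect(viewer))
        return True


router = CommandRouter()
router.register("explosion", lambda viewer: f"explosion triggered by {viewer}")
router.register("music", lambda viewer: f"music cue triggered by {viewer}")

router.handle("viewer_one", "!explosion")   # recognised command
router.handle("viewer_two", "hello cast!")  # ordinary chat, ignored
router.handle("viewer_two", "!MUSIC now")   # case-insensitive, extra words ignored
```

In a real show the callbacks would set off something inside the virtual world rather than return a string; the point of the sketch is only the routing from a viewer's message to an effect.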

The agency of those who only follow the show via streaming increases dramatically, prompting many to return to try new interactions, or perhaps even to buy a ticket as a hero and experience their presence in the story even more fully.

Giving viewers agency while staying true to the script. Also known as: on the immense skills of immersive actors

Not sure about you, but when I see an actor who can handle a “live” acting situation, I always wonder if they’re human or if their skills come directly from the wand of Harry Potter. That is to say, I’ve always watched those professionals at work with infinite admiration. I cannot recite a script, let alone improvise, so it feels like magic to me.

The remarkable thing about The MetaMovie (which we have also found in other brilliant examples of immersive live theater, such as Finding Pandora: X, or in our friends at Ferryman Collective) is that its actors are even better than that. They find themselves sharing a scene with “heroes” who are given maximum agency, an agency which I bet leads to the most unexpected responses and behaviors. Things that inevitably put the script on hold for several minutes.

The skill of these actors is thus to be found not only in handling the response to the hero’s “interpretive” choice, but also in getting the story back on track, going from the opening scene to the final one without getting completely lost along the way.

“We have like 100-page script for Alien Rescue that the actors have all memorized inside and outside down. And anytime one of our five heroes opens their mouth to say something, well, that’s going to interrupt the narrative process. Anytime they talk, we pivot out of the structured script and into an improvised scenario with that person. […] We always steer ourselves back to the script, once that improvised moment has run its course, because of course we want to keep the linear story going on. So the big challenge with this type of interactive activity is […] we don’t want this to be an open improv session. But we also want to allow for our heroes to really feel like they’re part of the story. And this is where the talent of these actors come in.”

Jason Moore

A talent specific to this wonderful community of artists, where everyone is learning through experimentation, for it is still a new art form.

Traditional switcher made virtual: on the narrative power of the multicam

We conclude this recap of the “Creating Reality: XR Interview with Jason Moore” event with the last macro-topic addressed by Jason and his team, namely that of multicam.

Although Resonite offers among its tools a streamer camera with a good interface, Moore, who has a film and television background and worked for many years with multicam technology both in the studio and remotely, had long been trying to set up a multicam system in Resonite.

This became possible thanks to Zyzyl, who managed, despite the complexity of the project, to develop a system which has now become one of the main tools used by The MetaMovie team to “share the magic of VR with the outside world, in a way that a single cam just can’t really do”.

Indeed, a Twitch user today has the opportunity, thanks to the skillful direction of Carlos Austin, the multicam director of The MetaMovie, to observe what happens during an Alien Rescue show from different angles, uncovering various perspectives (and even some characters’ secrets). This is certainly a much more cinematic way of presenting the story, definitely more exciting than the one a single-camera POV or a traditional over-the-shoulder shot might offer.

Alien Rescue is off and running with new shows, the first one next March 16. You can buy tickets from The MetaMovie website and choose to finally be the hero of your own story and the protagonist of your own movie.

But if you don’t have the equipment, no fear: as we have mentioned several times, The MetaMovie is also on Twitch and even from there we can influence the narrative and surprise the cast a little bit.

Come join the show, fellow bots.
