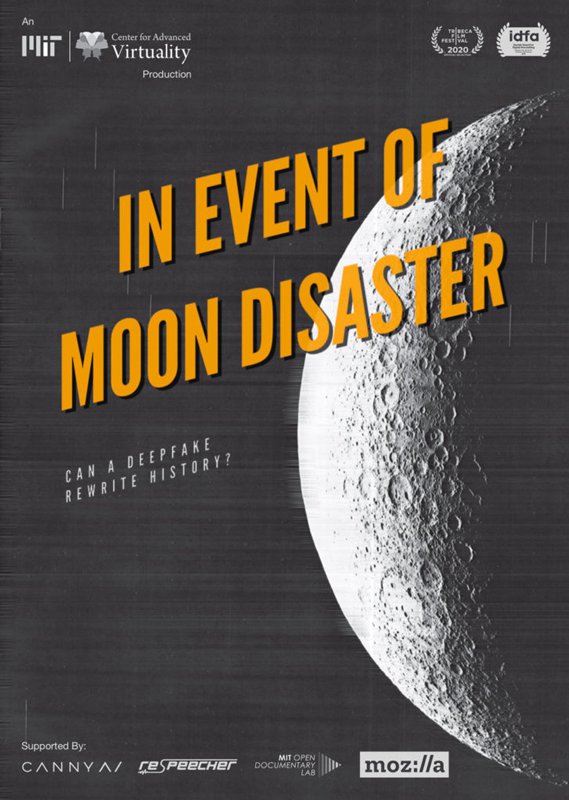

“In Event of Moon Disaster” was recently selected for the Tribeca Film Festival’s Virtual Arcade. A fascinating and surprising experience, In Event of Moon Disaster premiered at the IDFA DocLab and is a reflection on the power of deepfakes and the subtle role of misinformation in our society.

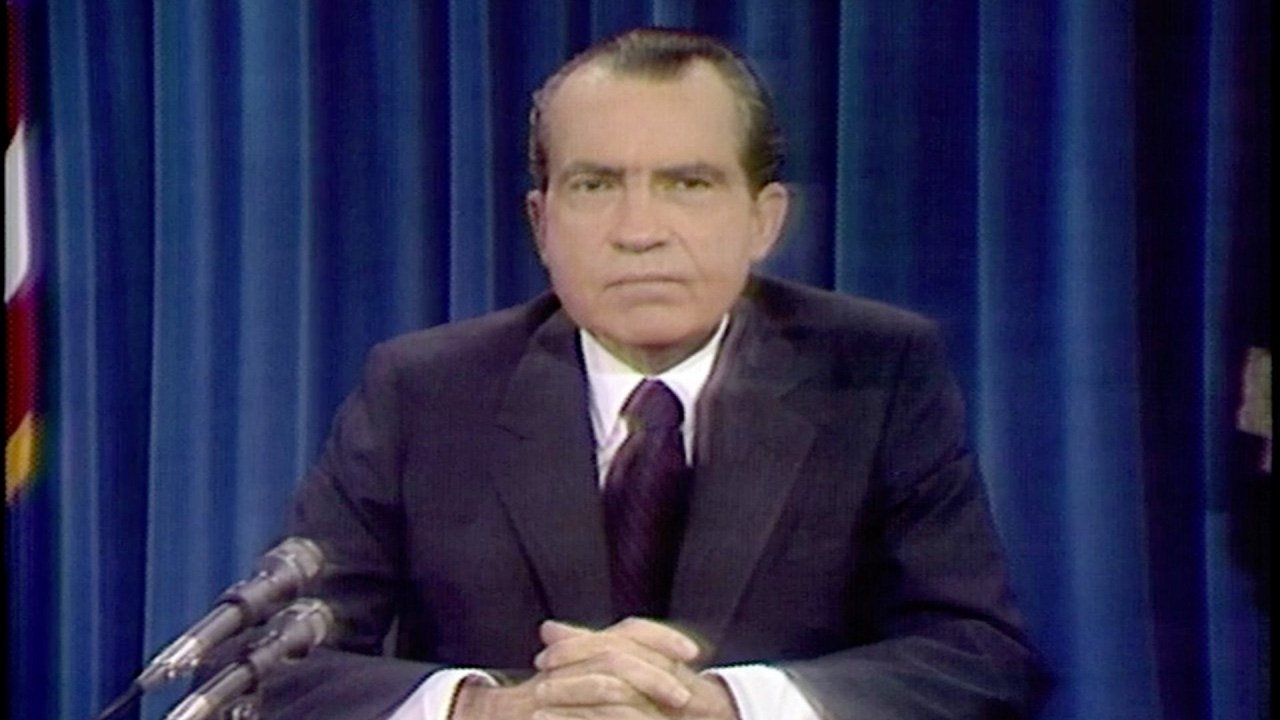

In Event of Moon Disaster illustrates the possibilities of deepfake technologies by reimagining a seminal event, the 1969 Apollo 11 moon landing: what if the mission had gone wrong and the astronauts hadn’t been able to return home? A contingency speech was prepared for President Nixon in case of just such a disaster, but it was never delivered. Until now.

Although the Tribeca Film Festival has made its 360 Cinema works available to the public through a collaboration with Oculus, which we covered in a previous article, the current emergency situation and the way the Virtual Arcade is built have made it impossible to share its lineup in the same way.

Fortunately, we had the chance to speak with Halsey Burgund, co-director of In Event of Moon Disaster along with Francesca Panetta, and find out more about the origins of this piece and its interesting educational applications.

In Event of Moon Disaster: the origins

About a year ago, we came up with the idea behind “In Event of Moon Disaster”. A bunch of us were in a room together: myself, Francesca Panetta (co-director of the piece), and a few other journalists who were interested in technology and the different ways it can be used.

We were brainstorming different ideas and possibilities when deepfakes came to mind. We were aware of them, and there were concerns about them and about how little was being done to control their use. Then the 50th anniversary of the moon landing came up, and I am a huge fan of space exploration and of everything it represents. It was at that point that we remembered the contingency speech written for President Nixon (to be delivered in case the mission failed and the astronauts died on the moon). And it hit us: let’s make President Nixon actually deliver this speech and build an alternate history to show the power that deepfakes bring to the misinformation ecosystem.

The contingency speech is a beautiful one, wonderfully written, and it talks not only about the individual astronauts and their sacrifice but also about exploration and mankind more broadly… how we will not be deterred. It is a hopeful message that rang true with us in a lot of ways. Obviously, if this speech had actually been delivered, it would have meant a major tragedy, but it was not. We all know things did not go that way. So highlighting that specific speech not only reaffirms that the disaster did not happen but also gives us the chance to emphasize our desire to explore, a desire that characterizes mankind. We thought the speech would be an effective vehicle for communicating the dangers of misinformation through both an aesthetic experience and more direct pedagogy.

On the power of misinformation

Misinformation is not new. As soon as there was information, as soon as there was data to report on, there was a way to make that data seem different. Photoshop, for example, has been around for decades, and it lets you manipulate real photos to convey something non-factual. We used all of these “cheap fakes” in our film: reversing videos, slowing them down, showing them with the wrong timing, and so on. We twisted archive footage around, deliberately creating something with major inaccuracies. We did this to show that our project is not only about deepfakes but also about easier ways to spread misinformation, ways that don’t require sophisticated technology. You slow down a video of Nancy Pelosi, you make it sound like she is drunk, and therefore she cannot be trusted. We want to shed light on this phenomenon and its implications, so we put together a team made up of different minds (media researchers, historians, journalists, technical experts, and so on) as a way to tackle all this from different points of view: social, philosophical, the perspective of those interested in technological progress, and so on.

Deepfakes and synthetic media can be used for good just as much as for bad. But at the end of the day, they leave you with the same big question: will we ever again be able to know what the truth is? Is there always going to be a shadow of doubt over absolutely everything? Are people just going to give up, become apathetic, and not accept anything as true other than what they have personally experienced?

Working on In Event of Moon Disaster

Creating In Event of Moon Disaster took a lot of effort. Of course, the deepfake was a big part of it, but not all of it. The rest of the film required a lot of editing time and, as always, we did not have all the funding we would have liked. The deepfake itself took about four months to complete.

We worked with two companies: Canny AI for the visual elements and Respeecher for the synthetic speech. We really wanted to do the best possible job with the available technology, because we felt that part of the piece was to demonstrate the best technology that was out there at the time. I say “at the time” because things have gotten better since. Indeed, it has become clear to us that the effort it took us to create something of that quality (whether you think it’s high or low quality) is shrinking month by month. As we move forward, new technologies will become available, and they will make producing such things easier and easier for more and more people. Democratizing technology is generally a good thing, but when it comes to technologies like these, it also opens them up to manipulative uses.

An anecdote from our actual experience: when we started working with Canny AI on the visual elements, they gave us explicit instructions about what they needed from us and told us that the target video (the video of Nixon that we wanted to manipulate) had to have certain characteristics. It basically needed to be a static shot of Nixon talking: no close-ups and no motion. This was to make the result more convincing. So we found a different video to replace the one we had originally planned to use (in that one, the shot started wide, with Nixon at his desk, and then zoomed in on his face).

But then, a month later, the company tried to apply modifications to the video we originally wanted to use… and it worked perfectly! This made it possible for us to add a more dynamic element to the speech itself and therefore to make it more believable.

In other words, over the course of just a month or two, the technology Canny AI had developed improved significantly. And since then, it has gotten much, much better… and much, much easier to use! I’m quite convinced that if we were to make In Event of Moon Disaster today, we would get results not only more quickly but also more convincing ones!

The one thing that would not change is the fact that our source material is 50 years old, and that creates lots of challenges. Take President Nixon’s audio: there are plenty of recordings of him, but they were made in all sorts of different situations, with different microphones and settings, which is very problematic and makes our work really challenging. There have been advances in noise reduction and in frequency analysis, but I don’t think that technology has improved quite as dramatically as deepfake production has.

Learning from experience: the International Documentary Film Festival Amsterdam

We were surprised by how our audience at IDFA reacted. As artists, we really wanted to encapsulate our installation in a way that felt immersive and did not feel like we were imposing education on people. We wanted them to have an experience: they walk into this room, sit down on the couch, and find leaflets with clever advertisements for deepfakes (“Tired of history telling you what happened? Try Deepfake!”)… Everything was designed to convey the idea that what you were experiencing was realistic but not actually real. And yet many people were confused by the video and by the whole experience. The fact that it was a deepfake was not crystal clear to everybody. Some people thought the video of Nixon was real but had never aired, some thought we had written the speech (and actually congratulated us on it!), and others did not even know who President Nixon was. We had not anticipated any of this, and we tried to explain it to our audience after the experience itself. Most of these people still got the message once we talked to them, but how many walked away without talking to us, and therefore without getting the message we were trying to convey? It was a big lesson for us to keep in mind when planning future presentations.

Our piece was supposed to be at Tribeca last week, and we had been thinking a lot about how to handle some of that onboarding and offboarding. We’re still sticking with the idea of encapsulating the aesthetic experience and then pulling back the curtain a bit, in particular through the forthcoming website, to say: “OK, that was not real, despite what you felt emotionally when you experienced it. This is how we made it, and this is what a deepfake is.” We hope to have the opportunity to do exactly that in future installations.

On the educational relevance of In Event of Moon Disaster

We brought an education producer, Joshua Glick, onto the team. He is working on a media literacy curriculum for college students in which this installation will be the framework around which media literacy is taught. We are actively working on that because we do think there is a lot to do and to gain from bringing it into a rigorous academic setting.

This is an important topic that we need to talk about, but talking about it in the context of an actual work of art, an immersive one at that, could have a much more significant impact than simply discussing it in the abstract. That is why we want to work this way with this film, and that is also why Scientific American is producing a short documentary about the piece. It is a documentary about deepfakes and how you create them, but it is told in the context of our piece: how we made it, what the challenges were, and so on. Hopefully, that can make In Event of Moon Disaster even more impactful!

In Event of Moon Disaster is an immersive art project directed by Francesca Panetta and Halsey Burgund and produced by the MIT Center for Advanced Virtuality.

Visit the official website to find out more about deepfakes and their impact on our society, and discover the piece on the XRMust database.
